Imagine this: You are at your office desk, headphones on, drowning out the usual hum of conversations. A co-worker approaches and asks you a question. Instead of removing your headphones and saying, “What?” you clearly hear their voice while the distant water-cooler chatter remains muted. Or picture yourself in a bustling restaurant, effortlessly tuning in to your table’s lively conversation while the background noise fades into oblivion. This futuristic scenario is no longer just a dream. Researchers at the University of Washington (UW) have developed a headphone prototype that lets users create a personalized “sound bubble.” The technology uses artificial intelligence (AI) to selectively amplify sounds within a customizable radius while muting distractions beyond it.

The Revolutionary “Sound Bubble” Technology

The system lets listeners set a bubble radius of 3 to 6 feet around them. Voices and other sounds outside this bubble are reduced by an average of 49 dB (decibels), roughly the difference between a vacuum cleaner and rustling leaves. Meanwhile, voices and sounds inside the bubble are slightly amplified for clarity.
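
To put the 49 dB figure in perspective, the short Python sketch below converts a decibel difference into plain ratios using the standard decibel definitions. The function names are illustrative and are not taken from the researchers' code. A 49 dB reduction works out to a drop of roughly 280 times in sound pressure, or about 79,000 times in sound power.

```python
def db_to_pressure_ratio(db: float) -> float:
    """Convert a level difference in dB to a sound-pressure (amplitude) ratio."""
    return 10 ** (db / 20)


def db_to_power_ratio(db: float) -> float:
    """Convert a level difference in dB to a sound-power (intensity) ratio."""
    return 10 ** (db / 10)


# Average reduction reported for sounds outside the bubble.
reduction_db = 49.0

pressure_ratio = db_to_pressure_ratio(reduction_db)
power_ratio = db_to_power_ratio(reduction_db)

print(f"Sound pressure reduced by a factor of about {pressure_ratio:.0f}")
print(f"Sound power reduced by a factor of about {power_ratio:.0f}")
```

Because the decibel scale is logarithmic, every additional 20 dB of reduction multiplies the pressure ratio by another factor of ten.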

How Does It Work?

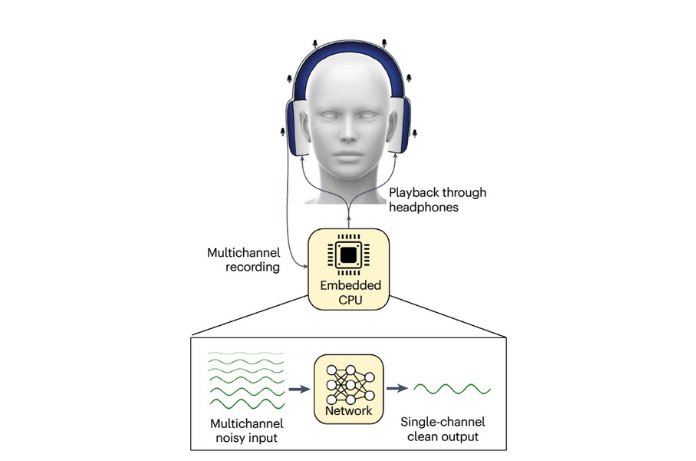

Using commercially available noise-canceling headphones equipped with six small microphones along the headband, the system employs AI algorithms to track the exact timing of sound reaching each microphone. The onboard computer processes this data in real time, within just 8 milliseconds, distinguishing between sounds inside and outside the bubble.
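
The idea of comparing arrival times across microphones can be illustrated with a classical technique, cross-correlation, which finds the time shift at which two recordings line up best. This is only a minimal sketch on synthetic data and not the researchers' method, which relies on a neural network. The sample rate, the signal, and the function name `estimate_delay` are all assumptions made for the example.

```python
import random


def estimate_delay(ref, other, max_lag):
    """Return the lag (in samples) at which `other` best matches `ref`.

    A positive lag means the sound reached `other` later than `ref`.
    """
    n = len(ref)
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        # Correlation score for this candidate shift.
        score = 0.0
        for i in range(n):
            j = i + lag
            if 0 <= j < n:
                score += ref[i] * other[j]
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


random.seed(0)
SAMPLE_RATE = 16_000  # Hz, assumed for illustration only
TRUE_DELAY = 3        # samples, chosen for the synthetic test

# A noise burst stands in for a sound source.
source = [random.gauss(0.0, 1.0) for _ in range(512)]
mic_a = source
mic_b = [0.0] * TRUE_DELAY + source[:-TRUE_DELAY]  # same sound, arriving later

lag = estimate_delay(mic_a, mic_b, max_lag=8)
delay_us = lag / SAMPLE_RATE * 1e6
print(f"Estimated delay: {lag} samples ({delay_us:.1f} microseconds)")
```

With six microphones there are many such pairs to compare, which gives a system a richer set of timing cues than a single pair would.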

Senior researcher and UW professor Shyam Gollakota, who led the project, explained the concept: “Humans aren’t great at perceiving distances through sound, especially in environments with multiple sound sources. Our AI system learns the distance of each sound source in a room and processes this data in real-time on the headphones, making it possible to focus on nearby conversations even in loud environments.”

From Research to Reality: The Journey of Development

Creating this “sound bubble” was no easy feat. Researchers needed a distance-based dataset to train the AI, but such a dataset didn’t exist. To solve this, they attached the headphones to a mannequin head placed on a robotic platform, which rotated while playing sounds from various distances. This process collected crucial data in 22 real-world environments, including offices and living rooms.

The system’s success stems from two key factors:

- Head Reflection: A user’s head naturally reflects sounds, helping the neural network differentiate audio based on distance.

- Sound Frequencies: Human speech and other sounds comprise multiple frequencies. The AI compares the phase differences of these frequencies to calculate their distance.
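
The phase comparison described above can be sketched in a few lines of Python. The example builds a synthetic sound from three tones, delays it slightly for a second microphone, and measures the phase difference at each frequency. It shows only the measurement step. How the real system turns such cues into a distance estimate is learned by its neural network and is not reproduced here. The frame length, delay, and helper names are assumptions for illustration.

```python
import cmath
import math


def dft_bin(signal, k):
    """Return the complex DFT coefficient of `signal` at frequency bin k."""
    n = len(signal)
    return sum(x * cmath.exp(-2j * math.pi * k * i / n)
               for i, x in enumerate(signal))


def phase_difference(sig_a, sig_b, k):
    """Phase of sig_b relative to sig_a at bin k, in radians."""
    cross = dft_bin(sig_b, k) * dft_bin(sig_a, k).conjugate()
    return cmath.phase(cross)


N = 64           # samples per frame (assumed)
DELAY = 2        # extra samples of travel time to the second mic (assumed)
BINS = [2, 4, 6]  # frequency bins present in the synthetic sound


def tone_mix(shift):
    """Sum of cosines at BINS, delayed by `shift` samples."""
    return [sum(math.cos(2 * math.pi * k * (i - shift) / N) for k in BINS)
            for i in range(N)]


mic_a = tone_mix(0)
mic_b = tone_mix(DELAY)

phases = {k: phase_difference(mic_a, mic_b, k) for k in BINS}
for k, phi in phases.items():
    print(f"bin {k}: phase difference {phi:+.4f} rad")
```

For a pure delay, the phase difference grows in proportion to frequency, so each frequency offers a slightly different view of the same path difference.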

Beyond Current Technology: Why It’s a Game-Changer

Existing headphones, like Apple’s AirPods Pro 2, can amplify voices in front of the wearer by tracking head movement. However, these features rely on direction rather than distance, making them less versatile. For example, they cannot amplify multiple nearby voices simultaneously, they lose functionality if the user turns their head, and they struggle to reduce noise from the same direction as the amplified sound.

The UW prototype eliminates these limitations by focusing on distance-based sound processing. This innovation allows the system to work seamlessly, regardless of head movement, and isolates sounds more effectively.

Looking Ahead: Expanding the Applications

Currently, the technology is designed to function indoors, as outdoor environments present additional challenges like inconsistent noise levels. However, the research team is already exploring ways to adapt the system for hearing aids and noise-canceling earbuds. This transition will involve rethinking microphone placement to fit smaller devices without compromising performance.

“Our earlier work assumed we needed significant distances between microphones to extract sound distances,” said Gollakota. “What we demonstrated here is that we can achieve this with just the microphones on the headphones, something that surprised us and opened up new possibilities.”

Real-World Potential and Future Plans

This groundbreaking technology holds immense potential for individuals in various settings, from office workers and students to restaurant patrons and commuters. Imagine using noise-canceling earbuds that let you hear your friend clearly in a crowded subway or attending a meeting with perfect clarity in a noisy cafe.

The researchers have also made the code for their proof-of-concept system publicly available, inviting others to refine and expand upon their work. To bring this innovation to market, the team is launching a startup dedicated to commercializing the “sound bubble” concept.

A Sound Revolution Is Here

The University of Washington’s “sound bubble” headphones promise to redefine how we interact with sound in noisy environments. By harnessing the power of AI and innovative design, they allow users to focus on what truly matters while tuning out the chaos.

As Gollakota puts it, “Creating sound bubbles on a hearable has not been possible until now.”

This technology not only addresses a practical need but also sets the stage for a new era in personal audio devices. The next steps in this journey will likely include advancements for outdoor use and integration into compact devices like earbuds and hearing aids.